Clustering¶

A wrapper function (cluster) is available for the user’s convenience,

it takes all arguments available to the clustering object and will output the

membership assignment.

To have more control over the algorithm, one can initialize the clustering object and call the iteration by themselves.

from raccoon.clustering import iterativeClustering

obj = IterativeClustering(data, **kwargs)

obj.iterate()

IterativeClustering takes an initial matrix-like object

(rows as samples, columns as features), and some optional flags,

including a matching list of labels

for plotting (labs).

It contains information on the chosen clustering options and a one-hot-encoded pandas data frame containing structure and assignment of the hierarchy of identified classes.

obj.clusOpt

The numerous flags available to IterativeClustering

allows for great flexibility in the clustering analysis.

Among these we find

|

sets the maximum depth at which the clustering is allowed to go,

if |

|

if active, trained maps, preprocessing status and feature filters will be saved to disk. |

|

set the path to a directory where all the plots and output data will be stored. |

For the full list of available options and their use, see API.

Normalization¶

As a first step in the clustering analysis, the data can be normalized by activating the

norm flag in the iterative clustering object. It takes default sklearn

norms, and is set by default at 'l2'. If None, no normalization will be applied before the features removal step.

Optimizers¶

There are currently two optimizers implemented, to be set with the optimizer flag.

A third option, tpe allows you to use Tree-structured Parzen Estimators

with Optuna.

|

Grid Search: given a set of parameters ranges and steps, the tool will evaluate all possible combinations on the defined mesh |

|

Differential Evolution: a simple evolutionary algorithm, it requires setting a number of candidates for the parametric space search and a number of maximum iterations [Storn1997] |

|

candidates for the parametric space search. |

While Grid Search requires defining the exact set of points to explore, either directly

or indirectly by setting the explorable ranges and steps for each parameter (see the next sections),

Differential Evolution requires some candidate points to be set with search_candid

and maximum iterations search_iter which will stop the optimization unless a solutions

improvement tolerance limit (set by default) is hit.

Warning: These two flags will override the parameters selection flags specific to each of the downstream steps. It will only keep information on their ranges (if set), to define the boundaries.

While Differential Evolution is in principle more efficient than Grid Search, it is not guaranteed to find the absolute minimum, and the results heavily depend on how extensive the search is. For exploratory runs, with a low number of search points, Grid Search is preferred. Differential Evolution should be the default choice when resources for a detailed search are available.

Selecting tpe will allow you to run a Tree-structured Parzen Estimators search with Optuna.

As for DE, the number of maximum candidate points to evaluate can be set with search_candid,

but the search will stop automatically after a few iterations if a plateau is reached.

A fourth option auto, will automatically switch between grid search and differential evolution

depending on the size of the dataset at each iteration step and, consequently, the candidates pool.

The dynamic mesh with dyn_mesh needs to be active when

this option is chosen.

Dynamic Mesh¶

The iterative search can easily become cumbersome with large datasets.

Decreasing the number of explored points during the optimization, however, may hinder

the convergence towards a point of absolute optimality and lead to the loss

of important details.

For this reason, we implemented a dynamic mesh, which automatically attempts to adapt

the granularity of the search at each iteration.

By setting dyn_mesh as True, the number of grid points (or candidates

and iterations in the case of DE) are calculated as a function of the population of the

investigated cluster.

Warning: Activating this function will override the chosen step or candidates/iteration numbers.

Objective Function¶

To drive the optimization, different clustering evaluation scores can be used

|

Silhouette Score: measures the ratio of the intra-cluster and inter-cluster distances, it ranges between 1 for optimal separation and -1 for ill-defined clusters. [Rousseeuw1987] |

|

Dunn Index: is the ratio of the minimum inter-cluster distance and the maximum cluster diameter. It ranges from 0 to infinity. [Dunn1973] |

These scores require measuring the distances between points and/or clusters

in the embedded space. With metricC, one can select which metric to use.

Standard measures, as implemented in sklearn

(e.g. 'euclidean' or 'cosine') are available.

When 'silhouette' is selected, its mean value on all data points is maximized.

To assure quality in the library’s outputs, sets of parameters

generating a negative score are automatically discarded.

Warning: the current implementation of the Dunn Index is not well optimized, avoid it unless necessary.

Alternatively, a custom scoring function can be provided. It must have compatible format, following that o of scikit-learn’s :code: silhouette_score. It should take as inputs a feature array and an array-like list of labels for each sample. It should also accept a scikit-compatible metric with the :code: metric flag.

Here is an example with the Rand index:

def arand_score(points, pred, metric=None):

""" Example of external score to be fed to raccoon.

Follows the format necessary to run with raccoon.

Args:

points (pandas dataframe, matrix): points coordinates, will be ignored.

pred (pandas series): points labels obtained with raccoon.

metric (string): distance metric, will be ignored.

Returns:

(float): the adjusted Rand Index

"""

return arand(truth.loc[pred.index], pred)

Please note that the Rand Index requires a ground truth Series to be compared with the results and will not use the position of the data points (athough it still needs to be provided to the function).

raccoon maximizes the objective function so make sure the direction

of your objective function is correct. A custom baseline score can be provided

with baseline.

Population Cutoff¶

The iterative search will be terminated under two conditions: if the optimization found a

single cluster as the best outcome or if the population lower bound is met.

The latter can be set with pop_cut and should be kept as high as possible to avoid

atomization of the clusters, but low enough to allow for the identification of subclasses.

(depending on the dataset, the suggested values are between 10 and 50).

Low-information Filtering¶

These are the methods currently available for low-information features filtering:

|

Variance : after ordering them by variance, remove the features that fall below a cut-off percentage of cumulative variance |

|

Median Absolute Deviation: like |

|

Truncated Single Value Decomposition: applies t-SVD to the data, requires to set the number of output components [Hansen1987] |

Although this step is not strictly necessary to run UMAP, it can considerably improve the outcome of the clustering, by removing noise and batch effects emerging in the low information features.

All these methods are set with filter_feat and require a cutoff, a percentage of the cumulative variance/MAD to be removed, or

the number of output components in t-SVD.

This is a tunable parameter and is part of the optimization process, its range and step

can be set with ffrange and ffpoints respectively.

For example, setting

filter_feat = 'MAD'

ffrange = 'logspace'

ffpoints = 25

will run the optimization on a logarithmic space between .3 and .9 in cumulative MAD with 25 mesh points.

This step can be skipped by selecting ‘variance’ or ‘MAD’, while setting the percentage of cumulative variance to be kept at 100% as the only explorable point.

filter_feat = 'variance'

ffrange = [1]

Non-linear Dimensionality Reduction¶

Following the low-information features removal is the dimensionality reduction through UMAP.

Here there are several flags that one could set, mostly inherited by UMAP itself, the

most important being dim, the dimensionality of the target space.

One should take particular care in choosing this number, as it can affect both

the results and the efficiency of the algorithm. The choice of metric for the objective

function will also depend on this value, as 'euclidean' distances are only viable in two

dimensions.

We suggest you leave the choice of mapping metric (metricM), the number of epochs (epochs)

and learning rate (lr), to their default values unless you know what you are doing.

Finally, as in the case of the features removal step, the number of nearest neighbours,

which defines the scale at which the dimensionality reduction is performed, is left as tunable

by the optimizer. You can choose the range and the number of points (if Grid Search is active) with

nei_range and nei_points respectively.

If the range is left to be guessed automatically, for example as a logarithmic

space based on the population ('logspace'), a factor can be set to reduce the

value proportionally (nei_factor) in the presence of particularly large datasets,

as high values of these parameters can impact the performance considerably.

This choice is crucial for a proper analysis, make sure to know your dataset well and run preliminary analyses if necessary.

A custom function that calculates the number of neighbors (or a range) from the population

can be also provided to nei_range. The function needs to take one argument, the subset population,

from which the parameter will be calculated and must return a single value or a list of values.

The population will be multiplied by nei_factor before being passed to this function and the

provided values will be cast to integer.

A simple example of this would be the square root function.

from math import sqrt

nei_range = sqrt

Or a more complicated one, a small range around the square root.

from math import sqrt

def range_sqrt(n):

sq = sqrt(n)

return [sq/2, sq, sq+sq/2]

nei_range = range_sqrt

If the dimensionality of the target space corresponds to the dimensionality of the input space

(after the low-information filter), this step will be skipped by default. This helps speeding

up the process by avoiding unnecessary calculations and it can be used in those cases where you

want to avoid running the dimensionality reduction step on your data.

If for any reason you still want to transform your data, you can set 'skip_equal_dim'

to False.

When activated, flag skip_dimred will allow you to skip this step completely.

Clusters Identification¶

The clusters identification tool is chosen with the clu_algo flag

|

Density-Based Spatial Clustering of Applications with Noise: density-based clustering, requires an \($\epsilon$\) distance to define clusters neighbourhood [Ester1996] |

|

Hierarchical DBSCAN: based on DBSCAN, it attempts to remove the dependency on \($\epsilon$\) but is still affected by the choice of minimum cluster population [Campello2013] |

|

Shared Nearest Neighbors: it accounts for clusters of varying density by building an adjacency matrix based on the number of neighbours within a given threshold. This implementation relies on DBSCAN to find the clusters from the similarity matrix. [Jarvis1973] |

|

Louvain Community Detection: this is an algorithm devised for the identification of communities in large networks. This implementation is based on the adjacency matrix calculated with SNN. [Blondel2008] |

Depending on which method has been chosen, different parameters are set as tunable for

the optimizer (e.g. \($\epsilon$\) for DBSCAN or minimum population for HDBSCAN).

With cparm_range one can set the range to be explored. By default, this is set

as guess which allows the algorithm to find an ideal range based on the elbow method.

If 'DBSCAN' is chosen as clustering algorithm, its minimum value of cluster size can also be set

with min_csize.

This step is also affected by the choice of metricC as distances need to be measured

in the embedded space.

For those clustering algorithms that allow discarding points as noise, the outliers

flag allows the user to chose what to do with these points:

|

points marked as noise will be left as such and discarded at the next iteration. |

|

attempts to force the assignment of a cluster membership to all the points marked as noise by means of nearest neighbours. |

Given that this step is in most cases considerably less expensive than the other two, and that the DE algorithm efficacy is considerably reduced above 2 dimensions, the search for this parameter is set by default as a Grid Search with fine mesh.

Transform-only data¶

Occasionally you may want to train your clusters only on a subset of the data, while still use them to classify some held-out set.

By setting transform you can ask the algorithm to run each one of the clustering steps

iteratively only on a given subset, while still forcing the membership assignment with k-NN

to the rest of the data.

The full dataset has to be given as input, including the data to project, but not used in the training.

transform takes a list-like object containing the indices of the points not to be used

for the training.

Activating this function will produce extra plots at each iteration, of projection maps colour-coded according to which points were used for the training and which transformed only.

Supervised clustering¶

The supervised boolean flag activates supervised dimensionality reduction with UMAP. When this flag is active, class labels need to be provided in labs

and are used to guide the training of the lower dimensionality spaces. You can tune how much the supervised information will affect the training with supervised_weight, which corresponds to the target_weight flag in UMAP. This is to be set to 0.0 to ignore the labels, or 1.0 to fully rely on them. By default, it is set as 0.5.

Saving hierarchy information¶

The resulting clustering membership will be stored as a one-hot-encoded pandas data frame in the obj.clusOpt variable.

However, auxiliary functions are available to store the hierarchy information as an anytree object as well.

import raccoon.utils.trees as trees

tree = trees.buildTree(obj.clusOpt)

buildTree requires the membership assignment table as input and optionally a path to where to save the tree in json format.

By default, it will be saved in the home directory of the run.

To load a tree from the json file loadTree only requires its path.

Plotting¶

Each run will produce a series of plots, which can be found in the raccoon_plots folder.

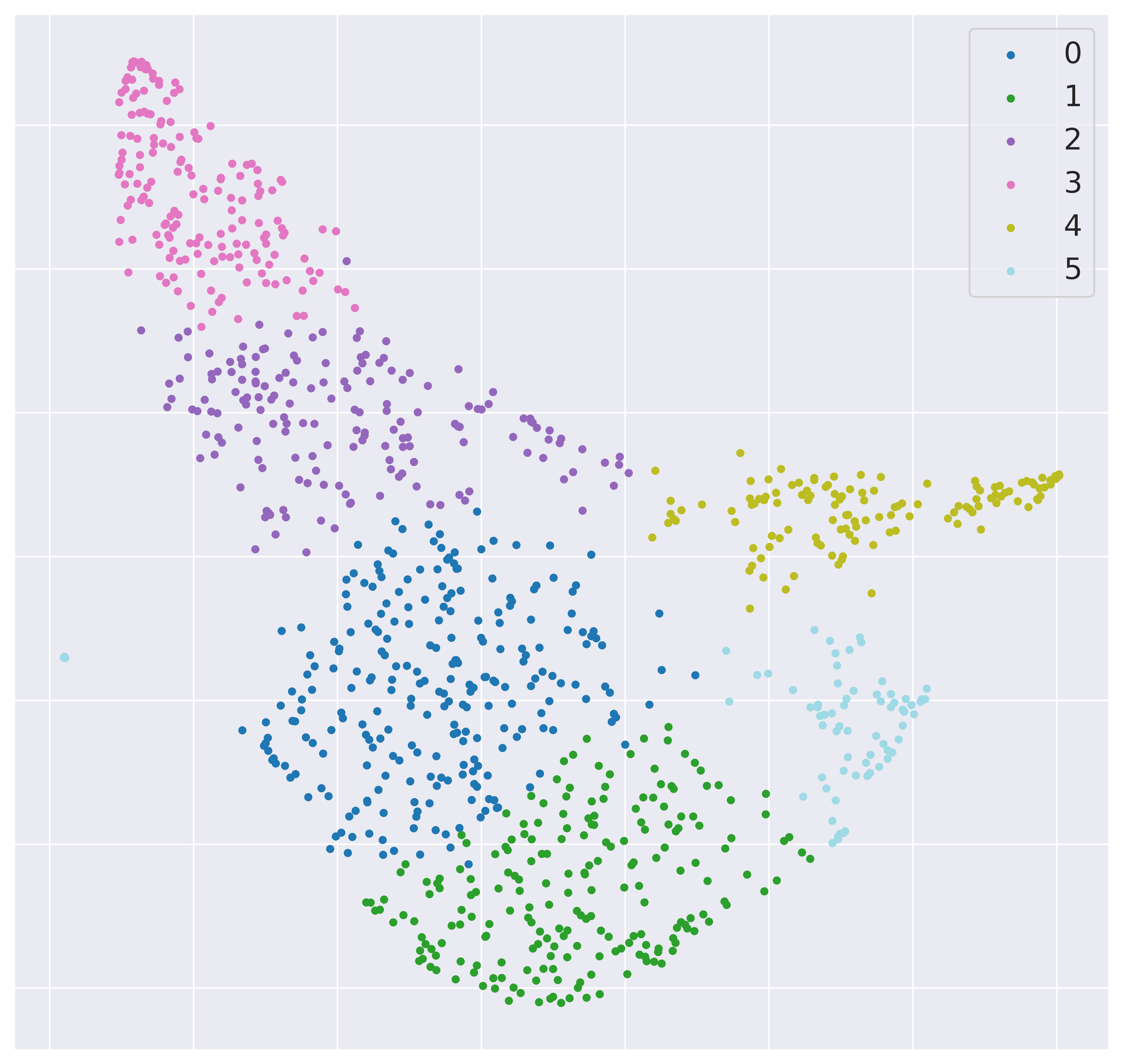

These will include 2d UMAP projections of the subset selected at each iteration, colour-coded by class and by label (if provided).

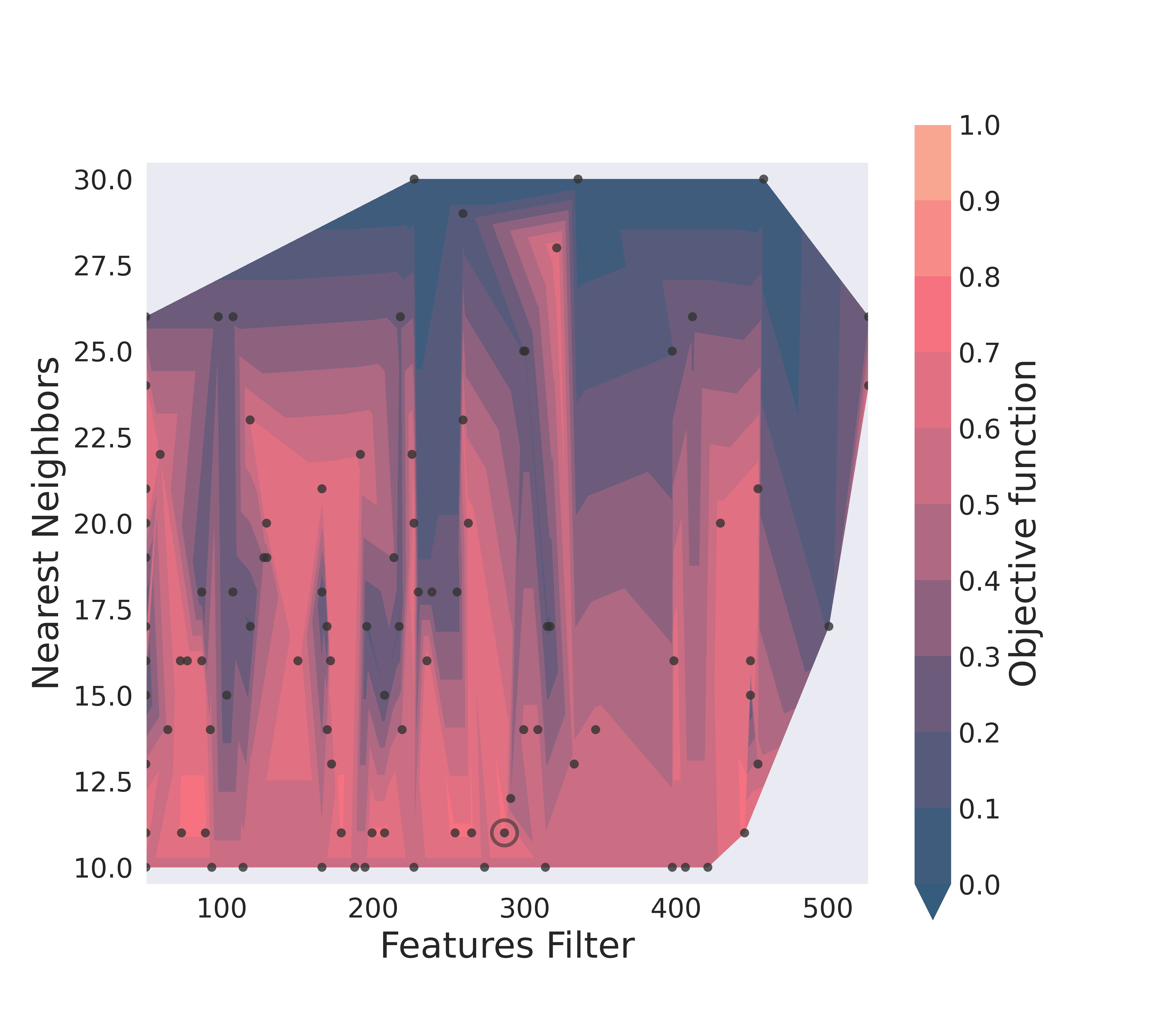

And an optimization surface built from the explored sets of parameters. This plot shows a colour map of the objective function best score as a function of the number of neighbours and the feature filters parameter value. Each set of parameters tested is a dot, the chosen optimal set is circled in black.

Resuming a run and checkpoints¶

It is possible to resume a previously interrupted run (or one which completed successfully in case you want to deepen the hierarchy),

with the wrapper function resume. This takes the same inputs as cluster.

chkpath is needed in its place. This should point to the raccoon_data folder where the instance to be resumed was run.

import raccoon as rc

cluster_membership, tree = rc.resume(data, lab=labels, dim=2, pop_cut=20, max_depth=3,

chkpath='path_to_original_run', save_map=True)

To resume, the original run needs checkpoint files. To create them, activate the chk boolean flag during your original run.

This will automatically build a chk subdirectory in the data folder and populate it with temporary class assignments.

While saving checkpoints may affect the efficiency of the run, it is recommended for

larger jobs to avoid losing all progress if something were to go wrong.

When resuming a run, all new data will be saved in the original directory tree.

resume takes most of the same arguments as cluster, you are free to change them,

e.g to allow for a finer or deeper search by decreasing pop_cut or increasing max_depth. The algorithm will automatically search for all

candidate classes and extend the search. This includes classes higher up in the hierarchy that fell below the population threshold.

Classes that were discarded as noise by the clustering algorithm or were below the min_csize cutoff

cannot be recovered.

References¶

Storn R. and Price K. (1997), “Differential Evolution - a Simple and Efficient Heuristic for Global Optimization over Continuous Spaces”, Journal of Global Optimization, 11: 341-359.

Rousseeuw P. J. (1987), “Silhouettes: a Graphical Aid to the Interpretation and Validation of Cluster Analysis”, Computational and Applied Mathematics, 20: 53-65.

Dunn J. C. (1973), “A Fuzzy Relative of the ISODATA Process and Its Use in Detecting Compact Well-Separated Clusters”, Journal of Cybernetics, 3: 32-57.

Hansen, P. C. (1987), “The truncatedSVD as a method for regularization”, BIT, 27:,: 534–553.

Ester M., Kriegel H. P., Sander J. and Xu X. (1996), “A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise”, Proceedings of the 2nd International Conference on Knowledge Discovery and Data Mining, 226-231.

Campello R. J. G. B., Moulavi D., Sander J. (2013), “Density-Based Clustering Based on Hierarchical Density Estimates, Advances in Knowledge Discovery and Data Mining”, PAKDD Lecture Notes in Computer Science, vol 7819.

Jarvis R. A. and Patrick E. A. (1973) “Clustering Using a Similarity Measure Based on Shared Near Neighbors”, IEEE Transactions on Computers, vC-22 11: 1025-1034.

londel V. D., Guillaume J-L., Lambiotte R. and Lefebvre E. (2008), “Fast unfolding of communities in large networks”, Journal of Statistical Mechanics, P10008.